We all know what it feels like to be tethered to technology these days. It’s not just our kids; it’s the rest of us as well. Probably one of the biggest hooks is the smartphone. As of 2016, there were about 2.1 billion smartphones worldwide. Considering there are about 7.6 billion people on the planet, that’s a LOT of smartphones! Then there’s social media. Facebook alone has over 2 billion users. Many people worry about smartphone addiction or screen addiction. We all know how we can get sucked into compulsively checking our smartphones, especially to text or use social media. Some people are “news junkies” who always seem to need their next news “fix.” In previous blogs, I discussed how we are drawn to screens to get our needs met. I also blogged about how both classical conditioning and supernormal stimuli can compel us to check our screens. There’s a third mechanism through which we get sucked into our devices: variable reinforcement. How variable reinforcement and screens work together is the topic of this blog.

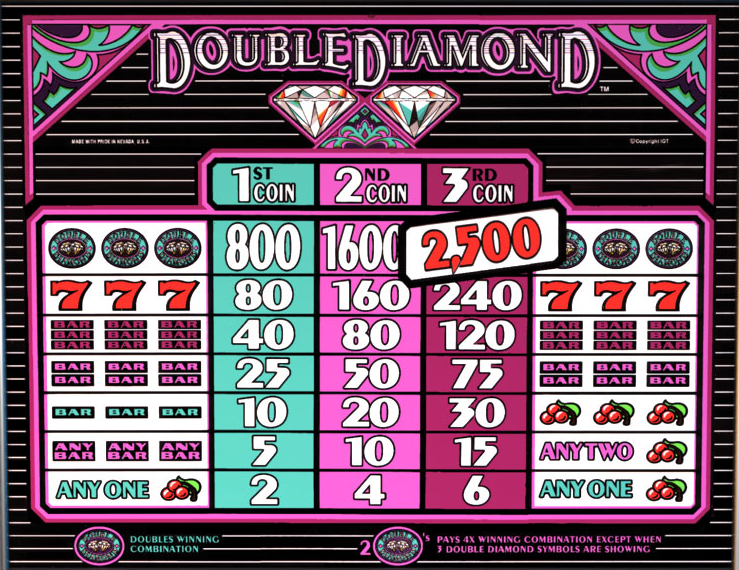

Slot machine manufacturers are well aware of the reinforcing power of a win, even if it’s small and comes only every so often. They use a particular type of reinforcement schedule to encourage gamblers to keep playing even though they are not reinforced with each pull of the lever.

- But the type of near miss just described does exist on most slots. Independent Testing Lab: “I am pleased to report that the near-miss concept went out with the 80s. In fact, near-miss games simply don’t exist in North America, period.” (Maida, 1997). Regulators: Ontario bans near misses in 14.1 and allows the type of near misses described.

- We are asked to determine which reinforcement schedule gambling at a slot machine is an example of. Let us look at the given choices one by one. In a fixed ratio schedule, reinforcement is delivered after a set number of responses has been completed. An example of a fixed ratio is a reward for every 6th response.

Technology Isn’t Bad

As I’ve said before, technologies such as smartphones, video games, and social media aren’t inherently bad. They provide countless benefits. If they didn’t, we wouldn’t use them! We like to use various technologies because they can meet our psychological needs for relatedness, autonomy, and competence. In a sense, the many practical, entertainment, and social reasons for using smartphones ultimately involve getting our psychological needs met. However, a curious thing happens with our technologies. We start to use them in a compulsive way that often starts to resemble an addiction.

Smartphone Addiction

The way we feel the need to check our phones begins to seem like that smoker who starts jonesing for a cigarette. We just HAVE to check our smartphone. We begin to check our phones so frequently that it interferes with our relationships, productivity, and our safety. Why do we keep checking our smartphones so compulsively? Why is it so hard to disengage from them and leave them in our purses, pockets, or another room? One particular mechanism appears to be involved and can explain this powerful hook: the variable reinforcement schedule.

A Little About Reinforcement Schedules

If you ever took an introductory psychology course, chances are you ran across B.F. Skinner. He was a psychologist and behaviorist who looked at how behavioral responses were established and strengthened by different schedules of reinforcement. For instance, a rat in a cage that is taught to press a lever to earn a food pellet (reward) might be taught that it gets one food pellet for every 3 presses of the lever. This would be an example of a fixed ratio reinforcement schedule, because the reward depends on a fixed number of responses rather than on elapsed time.

Although there are a variety of types and subtypes of reinforcement schedules that can affect the likelihood of different behavioral responses, I’m going to briefly discuss the variable ratio reinforcement schedule.

Variable Ratio Reinforcement Schedule

A variable ratio reinforcement schedule delivers a reward after an unpredictable number of actions that varies around some average. Using the rat example, the rat doesn’t know how many presses of the lever will produce the food pellet. Sometimes it is 1, other times it is 5, or 15…it never knows. It soon learns, though, that the faster it pushes the lever, the sooner it will receive the pellet. Researchers have found that variable ratio schedules tend to result in a high rate of responding (refer to the VR line in the graph above). Also, variable ratio schedules are extremely resistant to extinction. In the case of the rat, if the researchers stop giving pellets of food after lever presses, the rat will keep pushing the lever frequently for a very long time until it finally gives up (which is the extinction part). Slot machines are a real-world example of a variable ratio schedule.
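To make the rat example concrete, here is a minimal Python sketch of a variable ratio schedule. It is only an illustration under stated assumptions: the class name `VariableRatioSchedule`, the helper `presses_between_pellets`, and the choice to draw each requirement uniformly from 1 to `2 * mean_ratio - 1` (so that it averages `mean_ratio`) are all hypothetical, not how any particular experiment was run.

```python
import random


class VariableRatioSchedule:
    """Hypothetical lever box: a pellet arrives after an unpredictable
    number of presses that averages `mean_ratio`."""

    def __init__(self, mean_ratio, seed=None):
        self.mean_ratio = mean_ratio
        self.rng = random.Random(seed)
        self.remaining = self._draw_requirement()

    def _draw_requirement(self):
        # Assumption: uniform draw from 1..(2 * mean_ratio - 1),
        # whose average is exactly mean_ratio.
        return self.rng.randint(1, 2 * self.mean_ratio - 1)

    def respond(self):
        """Register one lever press; return True if it earns a pellet."""
        self.remaining -= 1
        if self.remaining == 0:
            self.remaining = self._draw_requirement()
            return True
        return False


def presses_between_pellets(schedule, n_pellets):
    """Press until n_pellets are earned; return the presses each one took."""
    gaps = []
    count = 0
    while len(gaps) < n_pellets:
        count += 1
        if schedule.respond():
            gaps.append(count)
            count = 0
    return gaps


gaps = presses_between_pellets(VariableRatioSchedule(5, seed=1), 1000)
print("first ten gaps:", gaps[:10])
print("average presses per pellet:", sum(gaps) / len(gaps))
```

The individual gaps jump around, so no single press can be predicted to pay off, yet the long-run average stays close to 5. That is the property the paragraph above describes: the only dependable way to get the next pellet sooner is to press faster.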

Variable Reinforcement in Our Daily Lives

It turns out, variable ratio reinforcement schedules are involved in many behavioral addictions, such as gambling. Yes, that’s right. In a sense, compulsively checking our phones is much like compulsive gambling. In fact, many “obsessions” and hobbies also involve this variable ratio reinforcement schedule, such as:

- Fishing

- Hunting

- Basically any type of collecting (e.g., collecting Pokemon cards, stamps)

- Looking for bargains while shopping at the mall, flea markets, or garage sales

- Channel surfing on TV (it seems that Internet surfing has largely supplanted that pastime)

Why Are Variable Reinforcement Schedules Powerful?

Variable reinforcement schedules are NOT bad. They are a way that we learn, and they can be very powerful. We learn causal relationships from “connecting the dots.” From an evolutionary perspective, learning causal connections enhances our chances of survival. For instance, if I do “Action A” then “B” is the likely result. When there is a variable relationship, performing “Action A” means “B” might be the result. The reward system in the brain releases dopamine in fairly large amounts in variable situations to motivate the organism to pay attention so that it might learn the causal connection. In essence, the brain is trying to “crack the code.” When the relationship between two stimuli is variable, the reward center of the brain keeps releasing dopamine so that we can try to figure out the connection.

Variable Reinforcement and Technology

It is easy to see how technologies such as social media, texting, and gaming work on a variable reinforcement schedule. Like a box of chocolates, we never know what we are going to get. Who posted to Facebook? What did they post? Who commented on my post? What did they say? My cell is buzzing: what could this be about? I wonder if Trump tweeted something crazy today?

The moment our smartphones buzz or chime, this reward system is activated. Importantly, it is the anticipation phase that is key to the activation of this reward system. We just HAVE to find out this information, whatever it is. It feels like an itch that needs to be scratched or a thirst that needs to be quenched. Variable reinforcement and screens form a powerful combination. In my next blog, I’ll discuss the brain and tech addiction in a bit more detail.

Slot Machines Are An Example Of Which Type Of Reinforcement Schedule

The Rat in Your Slot Machine: Reinforcement Schedules

When gamblers tug at the lever of a slot machine, it is programmed to reward them just often enough and in just the right amount so as to reinforce the lever-pulling behavior, that is, to keep them putting money in. Its effect is so powerful that it even overrides the conscious knowledge most players have that in the long run, the machines are programmed to make a net profit from customers, not give money out.

Slot machine designers know a lot about human behavior, and how it is influenced by experience (learning). They are required by law to give out on average a certain percentage of the amount put in over time (say 90% payout), but the schedule on which a slot machine's reinforcement is delivered is very carefully programmed in and planned (mainly small and somewhat randomly interspersed payoffs). Interestingly, this effective type of reinforcement schedule originally comes from studies with non-human animals.
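A small Python sketch can show how a machine might return about 90% of the money wagered while still paying out in small, randomly interspersed wins. The pay table below is entirely hypothetical (it is not taken from any real machine or regulation), and the names `PAY_TABLE`, `expected_return`, `spin`, and `play` are made up for illustration.

```python
import random

# Hypothetical pay table: (probability, payout as a multiple of the bet).
# The remaining 75% of spins pay nothing.
PAY_TABLE = [(0.20, 2), (0.05, 10)]


def expected_return(pay_table):
    """Long-run fraction of each bet that is paid back to the player."""
    return sum(prob * multiple for prob, multiple in pay_table)


def spin(rng, pay_table):
    """Return the payout multiple for one spin (0 means a losing spin)."""
    r = rng.random()
    cumulative = 0.0
    for prob, multiple in pay_table:
        cumulative += prob
        if r < cumulative:
            return multiple
    return 0


def play(n_spins, bet=1, seed=0):
    """Simulate n_spins; return (total wagered, total won, winning spins)."""
    rng = random.Random(seed)
    wagered = won = wins = 0
    for _ in range(n_spins):
        wagered += bet
        payout = bet * spin(rng, PAY_TABLE)
        won += payout
        wins += payout > 0
    return wagered, won, wins


wagered, won, wins = play(100_000)
print("theoretical return:", expected_return(PAY_TABLE))
print("simulated return:  ", won / wagered)
print("share of winning spins:", wins / 100_000)
```

Under this assumed table, roughly one spin in four pays something, which keeps the player reinforced, while about 10% of everything wagered stays with the machine over the long run.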

When you put rats in a box with a lever, you can set up various contingencies such that pressing the lever releases food to them. You could release food based on a fixed ratio of lever presses (every 10 presses drops some food), or a fixed interval (fifteen seconds must elapse since the last food delivery before a new lever press will release food). Alternatively, you could do it based on a variable ratio of presses (on average, it will take 10 presses to get food, sometimes more, sometimes less), or a variable interval (on average, food is available for pressing a lever every 15 seconds, but sometimes you have to wait longer, sometimes not as long).
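The four contingencies can be sketched as small Python functions, each returning a `press(t)` callable that answers "does a press at time `t` (in seconds) release food?". This is only a sketch under assumptions: the function names are hypothetical, the variable schedules draw from simple uniform ranges chosen to give the stated averages, and the interval schedules time each wait from the last food delivery, which is one common way to set up the contingency.

```python
import random


def fixed_ratio(n):
    """Food on every n-th press."""
    count = 0

    def press(t):
        nonlocal count
        count += 1
        if count == n:
            count = 0
            return True
        return False

    return press


def variable_ratio(mean_n, rng):
    """Food after a random number of presses averaging mean_n (assumed uniform)."""
    need = rng.randint(1, 2 * mean_n - 1)

    def press(t):
        nonlocal need
        need -= 1
        if need == 0:
            need = rng.randint(1, 2 * mean_n - 1)
            return True
        return False

    return press


def fixed_interval(seconds):
    """Food for the first press at least `seconds` after the last food delivery."""
    available_at = seconds

    def press(t):
        nonlocal available_at
        if t >= available_at:
            available_at = t + seconds
            return True
        return False

    return press


def variable_interval(mean_seconds, rng):
    """Like fixed_interval, but each wait is random, averaging mean_seconds."""
    available_at = rng.uniform(0, 2 * mean_seconds)

    def press(t):
        nonlocal available_at
        if t >= available_at:
            available_at = t + rng.uniform(0, 2 * mean_seconds)
            return True
        return False

    return press


def count_rewards(press, times):
    """Total food deliveries for presses made at the given times."""
    return sum(press(t) for t in times)


# A hypothetical rat pressing once per second for ten minutes.
times = range(1, 601)
rng = random.Random(0)
print("FR 10: ", count_rewards(fixed_ratio(10), times))
print("VR 10: ", count_rewards(variable_ratio(10, rng), times))
print("FI 15s:", count_rewards(fixed_interval(15), times))
print("VI 15s:", count_rewards(variable_interval(15, rng), times))
```

With one press per second, the fixed ratio schedule pays exactly 60 times and the fixed interval schedule exactly 40 times, and both are perfectly predictable. The variable versions pay a similar amount overall but give no clue as to which press will be the one that counts.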

A variable ratio schedule is perhaps the most interesting for the example of slot machines. If you make food available on a variable ratio, you can make sure food is given out often enough that the task remains interesting (i.e. the rat doesn't totally give up on pressing the lever), and you can also make it impossible for the rat to guess exactly when reward is coming (so it won't sit there and count to 10 lever presses and expect food; or it won't sit and wait 15 seconds before pressing the lever). Indeed, since the rat only knows it is somewhere in the range of when a reward might come, but doesn't know exactly on which press it is coming, the rat ends up pressing the lever over and over quite steadily. Other reinforcement schedules do not produce as consistent a pattern of behavior (the response curve is not nearly as steep or consistent).

Slot machine designers learned that lesson well and applied it to humans, for whom the same responses appear given a particular reward contingency. By providing payoffs on a variable ratio schedule, they give out money just often enough that people keep playing, and because it happens on average every X times, rather than exactly every X times, the players cannot anticipate when a reward is coming (if they could, they would not bother playing when it was not coming). Because any response could be the one that is reinforced, players are less likely to give up. The schedule keeps them in the seat the longest, tugging that lever repeatedly because it always feels like they are on the verge of getting paid off.

The lesson here is not just meant for gamblers. Our modern life is so full of coercive techniques aimed at controlling our behavior (based on principles of learning and conditioning like those mentioned above) that we have come to expect no less. We recognize that television commercials use tricks to convince us to buy products. We recognize that speech writers and marketing/PR firms perform careful studies to determine how language and word choice contribute to supporting or extinguishing a behavior. These things still affect our behavior, but recognizing coercive techniques is one of our few defenses against their invisible pull. And so it is worth it for all of us to pick up a little knowledge about the field of learning and behavior analysis, to better understand how our own behavior is conditioned, so that we might take back as much control as possible.

Last Updated: 01-25-07